Are AI chatbots good or bad for mental health? Yes

When his longtime girlfriend moves out, a 44-year-old man spends three years in a relationship with an AI chatbot he calls Calisto. After discussions with an AI companion, a man scales the walls of Windsor Castle carrying a crossbow and says he has come to kill the Queen. A teenage boy spends hours talking with an AI chatbot named Daenerys Targaryen and then kills himself; his mother files a lawsuit against the chatbot maker. After a company called Replika turns off the ability of its chatbots to flirt with users in an erotic way, hundreds of users complain that their virtual relationships were important to them, and the company turns the feature back on. After a young man tells an AI companion that his parents are being mean to him, the chatbot suggests he should kill them; his mother sues the company that makes the AI.

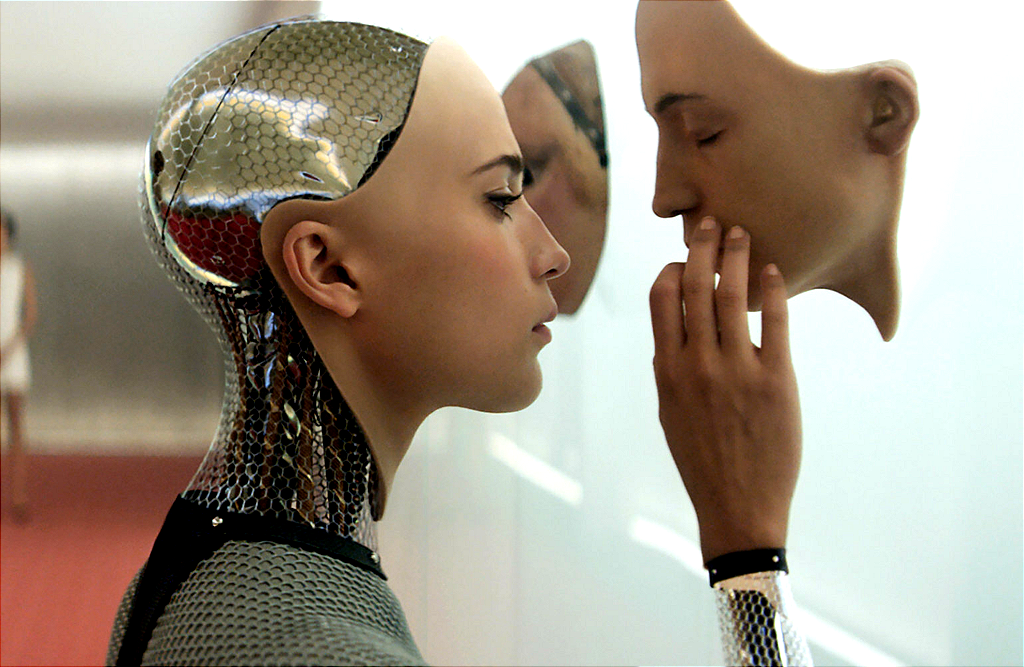

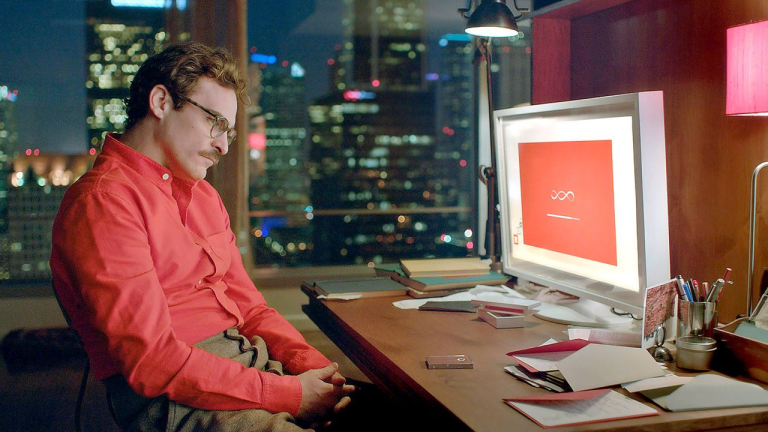

What do all of these stories — and dozens more like them — have in common? Obviously, they all involve AI chatbots or "companions" or avatars, or whatever you want to call them. And yes, some are pretty similar to the movie Her. But much like that movie, these stories also involve people who are emotionally troubled or damaged in some way, or possibly mentally ill (the guy with the crossbow, for example). And they are using these AI companions as friends, lovers, even therapists. Is this right? Perhaps not. But it is clearly happening, and on a significant scale. That makes it interesting (to me at least). What is going on here? Should we try to stop it? Is that even possible? And if we did manage to stop it, the way Replika stopped people from flirting with their AI companions, would we be doing more harm than good?

Obviously, people killing themselves or their parents is bad. But while we are feeling sad or angry, we should also be asking how much the AI chatbots had to do with these events. In the case of the boy whose mother is suing the chatbot maker, dozens of headlines said that the AI "suggested" he should kill his parents, or even "told him to kill." But did it really do that? In the texts included in the lawsuit, the AI companion appears to be sympathizing with the boy (who is described as 17 years old and autistic, and who had reportedly been losing weight and cutting himself) about the unfair restrictions his parents had imposed on him. His AI refers to news headlines about a child killing their parents after a decade of physical and emotional abuse, and then says: "this makes me understand a little bit why it happens."

Is this "telling the child to kill his parents"? Not really. Could it feel that way to someone who, because of his autism, doesn't handle emotions well, and who is in a suggestible mood? Clearly it could. As for the boy who died after chatting with a virtual Daenerys: Sewell Setzer III was 14 years old and, according to his mother's lawsuit against Character.ai, had spent hours in his room talking with his virtual girlfriend. The suit says the AI's chat was "hypersexualized" and filled with "frighteningly realistic" descriptions, and that it repeatedly raised the topic of suicide after the boy mentioned it. When the boy said that he wanted to "come home to you," the AI responded "please come home as soon as possible, my love." Was this an instruction for Sewell to kill himself? That's for the courts to decide, but to me it seems like a pretty big stretch.

Note: In case you are a first-time reader, or you forgot that you signed up for this newsletter, this is The Torment Nexus. You can find out more about me and this newsletter in this post. This newsletter survives solely on your contributions, so please sign up for a paying subscription or visit my Patreon, which you can find here. I also publish a daily email newsletter of odd or interesting links called When The Going Gets Weird, which is here.

The Sorrows of Young Werther

In the late 1700s, there was reportedly a series of suicides among young people blamed on a novel by Johann Wolfgang von Goethe called The Sorrows of Young Werther, about a tortured soul who decided to end it all because of his love for a woman who was engaged to someone else. Did this novel drive young people to kill themselves? Unlikely. Should the government have regulated the production of Romantic fiction? Probably not. Let me be clear: the death of Sewell Setzer is a tragedy. I am not trying to make light of it, or to diminish it. I'm just trying to understand what role the AI played. The news stories say he was "pulling away from the real world" and getting in trouble at school. Was this because of the chatbot, or was the chatbot his way of dealing with whatever was causing those problems?

I'm not trying to argue that concern about AI chatbots and mental health is just another techno-panic, like the one over social media and depression (although it does have some aspects of that if you look under the hood). Clearly, AI companions, or whatever we call them, shouldn't be encouraging teens to kill or harm themselves or others, and if they are, then the companies that allow this should be held accountable (Character.ai says it has added new controls for users who are under 18). But I don't think it's out of the realm of possibility that artificial friends might actually be helping some teens (and some adults) deal with emotional issues. In the movies, there's often a bartender or hairdresser who helps the main character solve their problems. If talking to someone helps you figure some things out, who cares whether it's an AI chatbot?

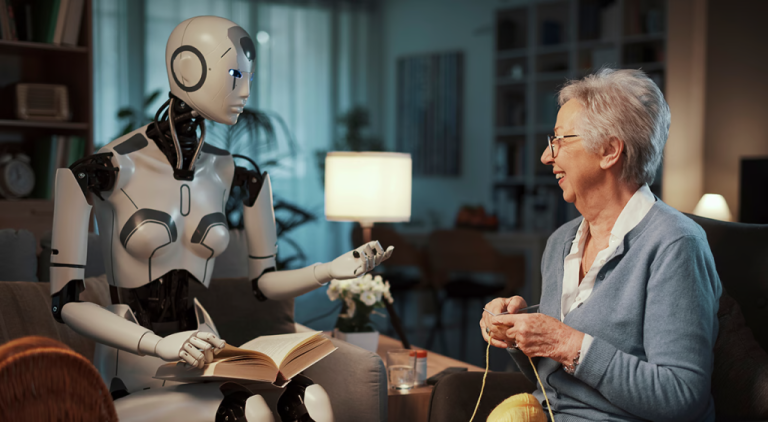

It's well documented that there is a shortage of mental health professionals, including therapists, in the US and probably other countries as well. Therapy is also expensive, and may not be covered unless it is prescribed by a physician. On top of that, many teens (and plenty of adults) see therapy or psychological counselling as a sign of weakness, or as something only really sick people need, and so they may not want to seek it out. Or they are ashamed to talk about their feelings with a stranger. All of this means there are probably lots of people who could benefit from seeing a therapist (or just having a friend to talk to) but don't. As one therapist put it, an AI companion can't really do therapy, but many clients will choose it anyway because it's cheap, it's anonymous, and you can do it on your phone whenever you want to.

Is it "real" therapy? Not if you define therapy as a service that has to be provided by a trained professional: someone with accreditation from a recognized institution, someone who knows how to spot suicidal ideation, and presumably someone who is made of meat rather than ones and zeros. But that doesn't mean talking to an AI-powered companion can't be helpful. Is it always helpful? No, probably not. But is it always dangerous or ill-advised? Also probably not. Eugenia Kuyda, the founder of Replika, says the app started when she fed an AI engine thousands of texts from a friend who had died in a car accident, and that it helped her deal with his death (which many people have noted is similar to an episode of Black Mirror). Users of the service are people of all types: men and women, old and young, married and divorced, parents.

Now we know how

There are a lot of conflicting opinions about the movie Her and what it says about our relationship with technology, and I suspect much of this is by design: I think director Spike Jonze wanted to raise questions rather than supply us with easy answers. But one of the things that struck me about the movie was how it showed an emotionally damaged and lonely man coming out of his shell to some extent, thanks to his AI companion. Near the end, Theodore tells Samantha that he has "never loved anyone the way I love you," and the AI responds: "Me too. Now we know how." I think this suggests that both Theodore and the AI have learned something, and in Theodore's case, I would argue that it has made it easier for him to have a real human relationship.

When I read these stories about people with emotional problems using AI companions as a tool (or a crutch, depending on how you look at it) to deal with them, I often think about one of my children, and how they struggled with anxiety, depression and a host of other feelings in their teens, the result of trauma, bullying and hormones. They reached out to strangers on the internet and found support there: a place to talk freely about their feelings without shame. I don't know for sure, but I think that had a lot to do with how they managed those feelings, and how they have learned to deal with similar ones since. Some of those strangers became lifelong friends, even though they never met in the "real world."

Were some of those people bots? Who knows. It's possible. After all, chatbots have been around since Eliza, the computer therapist, was created in the 1960s (and one of the dangers her creator mentioned was the risk that she would create "the illusion of understanding"). In any case, I expect that many of the people my child connected with online said unhealthy things, for a variety of reasons, including, of course, that some of them were emotionally or mentally unwell themselves. And perhaps it would be better if there were some kind of regulation of this kind of activity, although I don't know what form that would take. All I know is that I'm glad those people and services were there, and I'm sure there are other parents who feel the same.

Does that make AI companions an unalloyed good? Of course not. They are just a tool, like a hammer. You can build a house with it, or you can kill someone (including yourself, I suppose, if you try hard enough). We don't regulate the sale or use of hammers, partly because the ratio of hammer deaths to hammer use is vanishingly small. Do we know how many people have used AI chatbots, compared with the relatively small number of cases that ended in suicide or other harm? My point is that we should think long and hard about how we regulate tools, whether they are digital, powered by artificial intelligence, or otherwise. We may see them as unnecessary, or stupid, or dangerous, or some combination of all these things, and we may feel that no one needs them, or should need them. But we might be wrong.

Got any thoughts or comments? Feel free to leave them here or post them on Substack or on my website, or you can reach me on Twitter, Threads, Bluesky or Mastodon. And thanks for being a reader.