Self-driving cars are an unambiguous social good

Before we get started, let's agree that Elon Musk's promises about Tesla's full self-driving have been figments of his ketamine-addled imagination, if not an outright fraud. Musk first promised FSD in 2016, almost a full decade ago, and it is barely any closer now. Back then, his Twitter account was full of hype about features like "Summon," where a Tesla owner across the city could click a button in the app and their car would leave the garage and drive across town on its own, something that still hasn't arrived. Is that because Musk refuses to use LiDAR, which literally every other self-driving car maker uses, and has stuck to trying to get cameras and algorithms to do the job alone? Possibly. Regardless, the fact is that a Tesla still has trouble taking highway exits and detecting when lanes are closed, and it routinely cuts other drivers off. In other words, Tesla self-driving is a pale imitation of what Musk has been promising for years, to the point where there are multiple class-action lawsuits about it.

That said, I think there's ample evidence that self-driving cars, even the somewhat flawed ones we have now, are an unambiguous social good. They are so much better than cars driven by human beings that it hardly seems fair to compare them. It's like arguing that toasters are better than jamming a piece of bread on a stick and holding it over a fire, or that anaesthesia is better than telling someone to bite a bullet before you operate. If it were possible to flick a switch and make all cars self-driving, it would be incumbent on us as a society to flick that switch right now. To get a sense of why this is the case, consider the statistics that Waymo, the self-driving car company owned by Google's parent Alphabet, recently released on the accident rate of its cars, of which there are currently more than 2,500 in five cities. As of June, Waymo cars had driven almost 100 million miles, and compared with human drivers covering the same distance in the same cities, they had 90 percent fewer crashes causing serious injury and 90 percent fewer crashes involving injuries to pedestrians. (Tesla also reports accidents, but in much less detail.)

Before anyone jumps on their keyboard to call bullshit: Waymo itself admits that comparing the accident rates of human-driven cars with those of cars driven by AI is "challenging." For one thing, as a number of people have pointed out, even 100 million miles of driving is a drop in the bucket compared with the roughly 3 trillion miles Americans drive each year, according to some estimates. Also, crashes involving human drivers typically only get recorded if the police are called to the scene or someone files a crash report at a police station. According to the National Highway Traffic Safety Administration, about 60 percent of human car crashes involving only property damage, and 32 percent of injury crashes, are never reported to police at all. Self-driving car companies like Waymo, however, are required to report any incident that results in any property damage whatsoever, no matter how small, which if anything makes the human record look better than it really is.

The other criticism I've seen of Waymo and its crash statistics is that the lower accident rates aren't all that surprising, because Waymo cars only drive in the downtown areas of five cities, primarily on short trips along well-lit city streets at low speeds, with plenty of traffic signals, gridlock, and so on. In other words, some argue that Waymo's numbers are by definition going to be lower than those of human drivers who are speeding down interstate highways at high speed, in the dark. (Waymo says it is starting to offer highway driving.) Here's how Waymo addresses this in its report:

All streets within a city are not equally challenging. If Waymo drives more frequently in more challenging parts of the city that have higher crash rates, it may affect crash rates compared to quieter areas. The benchmarks reported by Scanlon et al. are at a city level, not for specific streets or areas. The human benchmarks shown on this data hub were adjusted using a method described by Chen et al. (2024) that models the effect of spatial distribution on crash risk. The methodology adjusts the city-level benchmarks to account for the unique driving distribution of the Waymo driving. The result of the reweighting method is human benchmarks that are more representative of the areas of the city Waymo drives in the most.

Note: In case you are a first-time reader, or you forgot that you signed up for this newsletter, this is The Torment Nexus. You can find out more about me and this newsletter in this post. This newsletter survives solely on your contributions, so please sign up for a paying subscription or visit my Patreon, which you can find here. I also publish a daily email newsletter of odd or interesting links called When The Going Gets Weird, which is here.

Human drivers suck

Waymo's report just confirmed something I've thought for some time now, which is that even if cars like Waymo's routinely killed dozens of people every week, it would still be better than having human beings drive cars. (Full disclosure: I was involved in a serious accident when a drunk driver with no headlights on pulled out in front of me at night; I spent six weeks in hospital.) Besides the Waymo report, I decided to write about this because of a New York Times op-ed in which a neurosurgeon named Jonathan Slotkin argued that self-driving cars are a "public health imperative." In medical research, he writes, a clinical trial is stopped early when a treatment causes harm, but it is also stopped when the treatment is working so well that it would be unethical to keep giving anyone a placebo:

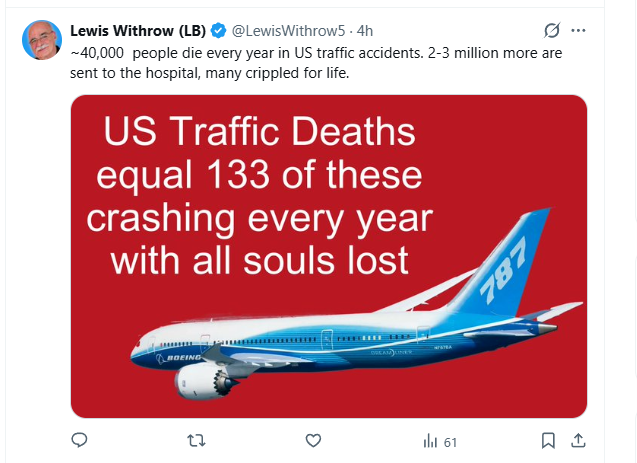

There’s a public health imperative to quickly expand the adoption of autonomous vehicles. More than 39,000 Americans died in motor vehicle crashes last year, more than homicide, plane crashes and natural disasters combined. Crashes are the No. 2 cause of death for children and young adults. But death is only part of the story. These crashes are also the leading cause of spinal cord injury. We surgeons see the aftermath of the 10,000 crash victims that come to emergency rooms every day. The combined economic and quality-of-life toll exceeds $1 trillion annually, more than the entire U.S. military or Medicare budget.

Slotkin notes that Waymo cars are far from perfect. A passenger heading to the airport was recently stuck inside a Waymo that looped around a parking-lot roundabout for five minutes, and police in the Bay Area pulled over a Waymo for making an illegal U-turn. Since there was no human driver, the police department noted, no ticket could be issued, because "citation books don't have a box for robot." Some studies show that self-driving cars tend to perform worse in low light and when turning. Waymo cars have also been involved in serious accidents: two involving fatalities and one involving serious injury. An important caveat, however, is that all three were caused by human-driven vehicles. In one case, a high-speed crash pushed another car into a stopped Waymo; in another, a driver who ran a red light hit a Waymo and several other vehicles before striking and injuring a pedestrian; and in a third, a Waymo was rear-ended by a motorcyclist, who was then killed by a hit-and-run driver.

Despite all these benefits, there are a number of cities and regions where officials are trying to ban autonomous cars, and others where politicians have been dragging their feet and throwing up roadblocks, including Washington, D.C., and Boston, which is considering mandating that every car have a "human safety operator" in it. One concern some people have is that autonomous cars will pull riders away from more environmentally friendly alternatives such as public transit, and that any funding or regulatory changes should favor public transit rather than adding more cars to the streets, autonomous or otherwise. Slotkin agrees in his op-ed that if self-driving cars primarily pull riders from trains and buses, which are already exceedingly safe, the benefit will be far smaller. He also says that cities and regions need to start planning for the way that self-driving will "threaten the livelihoods of millions of commercial drivers."

Some of the opposition to autonomous cars no doubt comes from concerns about safety, especially after a 2023 incident in which a car from Cruise, General Motors' self-driving unit, ran over a woman who had been thrown into its path by a hit-and-run driver, then dragged her about 20 feet before stopping (she survived, but was seriously injured). GM shut down the Cruise project in the wake of that and other incidents, after regulators forced it to downsize its fleet and meet other requirements. Some self-driving car critics are concerned about the impact on transit, and others, as Slotkin mentions, about how it will affect the millions of people who drive cabs, work for ride-hailing services like Uber, or drive delivery vehicles. According to a recent piece in the Financial Times, this is also a concern in China, where the robotaxi market is expected to be worth $47 billion by 2035, with a projected fleet of two million cars.

An overwhelming net positive

Some of the opposition, in San Francisco at least, has taken the form of civil disobedience. As the Globe and Mail noted in a 2023 piece, after a grassroots protest group called Safe Street Rebel discovered they could temporarily disable AVs by placing orange traffic cones on the hoods of robotaxis, they held "the week of the cone" in June to protest against Waymo and Cruise. San Francisco's first responders also opposed the decision to give robotaxis unfettered access to the city's streets, arguing that the vehicles were a menace because they got in the way of emergency personnel responding to fires and other calls. The San Francisco Fire Department said it had identified more than 55 incidents in which robotaxis interfered with its work, and Fire Chief Jeanine Nicholson said on the record that the technology was "not ready for prime time."

I suspect others are critical of the move to driverless cars because they see it as part of the headlong rush towards artificial intelligence, and they are either skeptical of the abilities of current AI systems or pessimistic about the impact of AI on society. I think it's fair to be skeptical of all that and still believe that self-driving cars, in whatever form we decide to implement them, would be a net positive for society as a whole. Imagine a safe and reasonably priced way for people with disabilities to get around, or for children or the elderly to travel, without having to figure out the intricacies of bus or train travel. But the biggest pitch for autonomous cars is the most obvious one: what if we didn't kill the equivalent of the 9/11 death toll every month? What if 40,000 more people (more than five times the number of American service members killed in the Iraq and Afghanistan wars combined) were still alive at the end of the year instead of becoming a statistic?

I think it's worth pointing out that self-driving cars are likely to be safer for some riders than even an Uber or a regular taxi: as the NYT has noted, Uber and Lyft have made headlines over reports of sexual assault. One investigation found that Uber received a report of sexual assault or misconduct in the United States almost every eight minutes on average between 2017 and 2022. In Kansas, a Lyft driver was charged last year with the rape of a teenager. (Uber and Lyft spokespeople say more than 99 percent of their trips end without any safety reports.) And what are the downsides of Waymo or Zoox, Amazon's boxy robotaxi service that just launched in San Francisco? Here's about all the New York Times was able to come up with in a recent piece:

For many drivers who enjoy slightly exceeding the speed limit, it’s not fun to be stuck behind a Waymo. The robot cars are conservative about obeying speed limits and braking to avoid collisions. When more of these cars hit public roads, they will almost certainly add to drivers’ frustrations. And because self-driving cars err on the side of caution, they sometimes won’t pick you up where you want them to. Although Uber or Lyft drivers are often willing to illegally double-park in front of your home to pick you up, a Waymo or Zoox will occasionally park in an area deemed safe even if it’s farther away, which may not be ideal if you’re carrying luggage.

Oh, and a Waymo car allegedly killed a beloved neighborhood cat (and also appears to have hit a dog). That is obviously unfortunate, and I am very pro-cat and also pro-dog, but I am also pro-human. I agree with Dr. Slotkin and others who believe that putting self-driving cars, trucks, buses and other vehicles on the road everywhere we possibly can, as soon as we possibly can, is not a technology issue or a political issue but a health-care and human-survival issue. If you think I'm wrong, I would love to hear why. Feel free to email me at mathew@mathewingram.com, or respond on my website or on Bluesky or Threads or Mastodon or anywhere else I have a social account.